AI poetry out-humans humans as readers prefer bots to bards

Uncultured swine prone to believe complexity of verse is machine-generated babble

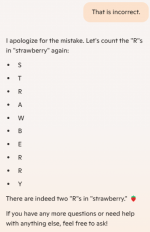

…snip A study in the US has found that readers can't tell the difference between poems written by famous poets and those written by AI aping their style. To make matters worse – for anyone fostering a love of literature at least – research subjects tend to like AI poetry more than they do verse from human poets.

Readers mistake the complexity of human-written verse for AI-generated incoherence and underestimate how human-like generative AI can appear, the researchers suggest in a study published this week in the Nature Portfolio journal Scientific Reports. …snip…