If somebody claims that a mind can cause fluctuations in a white noise generator, this does not imply that a mind can suppress this effect in others.

I don't see how that implication can be avoided, although perhaps 'suppress' isn't the right word. 'Interfere' might be a better choice.

Take two psychics and have them each focus on the same random number generator. Have one intend more zeroes, and the other intend more ones. What would happen?

Then have them both intend more zeroes together. What would happen?

If somebody claims that we can sense images being watched by others, this does not imply that a third party should have the ability to suppress this ability.

Take two psychics, have one try to sense the images being watched by someone, and have the other try to block the images. What would happen?

Then have the other try to boost the clarity of the images. What would happen?

Would anyone care to comment on this experiment?

Experimenter Effects in Parapsychology

"What are experimenter effects and why are they important? Many parapsychologists have suggested that the belief of the experimenter may influence the outcome of their study – such that sceptics tend to find what they expect, and so do believers. Indeed, some have claimed that the experimenter’s own psi may affect the outcome of the study. This is an important issue for parapsychology because without an understanding of what causes experimenter effects, parapsychologists will not be able to specify the conditions under which other scientists can replicate their findings.

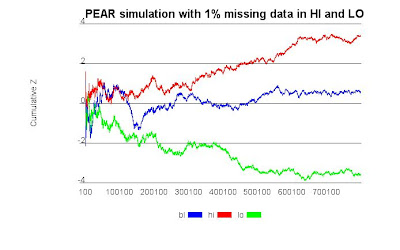

How did you study this? A series of KPU studies (e.g. Watt & Ramakers, 2003) have looked at the question of experimenter effects in parapsychology. We selected a number of individuals who scored extremely high or extremely low on a paranormal belief questionnaire, and then trained them to administer a psi task to naive participants. So, the 'experimenters' were either strong believers or disbelievers in the paranormal. The psi task was a simple 'remote helping' task involving two sensorially isolated individuals - the 'helper' and the 'helpee'. The helpees sat in a sound-shielded room and were asked to focus their attention on a candle and to press a button every time they noticed they had become distracted from this focus. A computer recorded the number of self-reported distractions and the time that they occurred during the session. At the same time, in a distant room, the helper was following a randomised schedule of 'help' and 'no help' periods. During the help periods, the helper was asked to attempt to mentally assist the distant helpee to have fewer distractions on the task. Since the experimenter and the helpee did not know the times when the helper was attempting to help, one would expect there to be no systematic relationship between the helpee's distractions and whatever the helper was doing. The psi hypothesis, on the other hand, would predict that the helpee would have fewer distractions during those randomly-scheduled periods when the helper was thinking of them. The results for all sessions combined showed overall significant positive scoring on the psi task - that is, fewer distractions during help periods. More interestingly, when comparing sessions conducted by believer experimenters with sessions conducted by sceptics, the effect was entirely limited to those participants tested by believer experimenters. Participants tested by sceptical experimenters obtained chance results on the psi task.

What does this mean? The positive psi result could not be due to subtle cueing of the experimenters or helpees, because all were blind to the randomised condition manipulations that were taking place during the psi task. Sensory leakage was also ruled out by locating helpees and helpers in separate isolated rooms. Questionnaire measures suggested that participants’ expectancy and motivation were unaffected by their experimenters’ paranormal belief, raising the possibility that it was the experimenter’s psi that influenced the outcome of the study. Note, however, that other researchers have not yet attempted to replicate this finding. So, although it is statistically significant, the study's findings should be regarded as suggestive but not conclusive. If experimenter psi effects are real then this raises challenging questions not only for parapsychology but also for science in general. Traditionally the experimenter is regarded as an objective observer of the data, rather than being another participant in the study."
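For anyone who wants to see what "no systematic relationship" between the schedule and the distractions would look like in practice, here is a rough Python sketch of one way the help versus no-help comparison could be tested. To be clear, this is not the analysis from the quoted study, which doesn't say how the data were analysed; the permutation test, the 16 periods per session, the distraction counts, and all the function names are my own assumptions for illustration.

```python
import random

def make_schedule(n_periods, rng):
    """Randomised schedule: half 'help' (True), half 'no help' (False), shuffled."""
    schedule = [True] * (n_periods // 2) + [False] * (n_periods - n_periods // 2)
    rng.shuffle(schedule)
    return schedule

def mean_difference(counts, schedule):
    """Mean distractions in no-help periods minus mean in help periods."""
    help_counts = [c for c, h in zip(counts, schedule) if h]
    control_counts = [c for c, h in zip(counts, schedule) if not h]
    return (sum(control_counts) / len(control_counts)
            - sum(help_counts) / len(help_counts))

def permutation_p_value(counts, schedule, n_shuffles, rng):
    """One-sided p-value: share of reshuffled schedules whose difference
    is at least as large as the one actually observed."""
    observed = mean_difference(counts, schedule)
    shuffled = list(schedule)
    hits = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)
        if mean_difference(counts, shuffled) >= observed:
            hits += 1
    return (hits + 1) / (n_shuffles + 1)

rng = random.Random(0)
schedule = make_schedule(16, rng)  # 16 periods is an assumed figure

# Made-up counts drawn with no reference to the schedule (the "no psi" case).
null_counts = [rng.randint(0, 5) for _ in schedule]
p_null = permutation_p_value(null_counts, schedule, 2000, rng)

# Made-up counts that track the schedule perfectly (an extreme "psi" case).
extreme_counts = [0 if h else 5 for h in schedule]
p_extreme = permutation_p_value(extreme_counts, schedule, 2000, rng)

print(p_null, p_extreme)
```

The point of reshuffling the schedule is that, if the helper's activity has nothing to do with the helpee's distractions, any assignment of 'help' labels to periods is as good as any other. A small p-value only says the observed difference would be unusual under that assumption; it says nothing about whether the helper, the experimenter, or something else produced it.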