BasqueArch

Originally Posted by BasqueArch

There's no engineering reason why the NW corner should be hopping up and down past 0 acceleration from 1(s)-6(s).

Differentiating displacement down to acceleration significantly amplifies small noise in the displacement data.
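To put a rough number on that amplification, here is a minimal sketch, not anyone's actual processing chain. It takes a point that is not moving at all, adds a small random tracking error, and differentiates twice with plain finite differences. The sampling interval `dt`, the noise level `sigma`, and the function name `second_difference` are all illustrative assumptions, not values from the real trace.

```python
import random
import statistics


def second_difference(x, dt):
    """Central-difference estimate of the second derivative of x,
    sampled every dt seconds (interior points only)."""
    return [
        (x[i + 1] - 2.0 * x[i] + x[i - 1]) / dt**2
        for i in range(1, len(x) - 1)
    ]


random.seed(0)       # fixed seed so the run is repeatable
dt = 1.0 / 30.0      # assumed sampling interval in seconds (illustrative)
sigma = 0.05         # assumed tracking noise, in displacement units (illustrative)
n = 300              # number of samples

# True displacement is exactly zero; every value here is pure noise.
displacement = [random.gauss(0.0, sigma) for _ in range(n)]
acceleration = second_difference(displacement, dt)

noise_in = statistics.pstdev(displacement)
noise_out = statistics.pstdev(acceleration)
amplification = noise_out / noise_in

# For independent noise the expected factor is sqrt(6) / dt**2.
print(f"displacement noise: {noise_in:.4f}")
print(f"acceleration noise: {noise_out:.1f}")
print(f"amplification:      {amplification:.0f}x")
```

Under these assumptions the true acceleration is zero throughout, yet the derived trace swings far from zero purely because of the displacement noise.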

That’s the point. The acceleration data is a function of the wrong (displacement, time) data, which needs to be calibrated to produce a flat 0 acceleration. It's not the acceleration data itself that needs to be adjusted.

BA- The period 1(s)-6(s) should be recalibrated to produce a flat line 0 acceleration.

That’s the point. The real data is not what you have, because it produces the wrong acceleration data. What you have is inadequately adjusted sampling error.

femr2- It doesn't work like that, I'm afraid. I can perhaps filter out some more noise, but it cannot be eliminated completely, and filtering will remove real data in the process.

BA- This adjustment should be applied to the subsequent (displacement, time) data to see what the more accurate adjusted acceleration line would look like.

The S-G smoothing is being applied to the acceleration line derived from the wrong (displacement, time) data.

femr2- Savitzky-Golay smoothing is applied at each differentiation step already.
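For anyone unsure what smoothing at each step means in practice, the following is a hedged sketch only. It uses the textbook 5-point quadratic Savitzky-Golay derivative weights (-2, -1, 0, 1, 2)/10, applied once to get velocity and again to get acceleration, and compares the result with an unsmoothed second difference on a synthetic free-fall trace. The window size, `dt`, `sigma`, the synthetic data, and the function names are assumptions for illustration; the parameters actually used for the graph are not stated in this thread.

```python
import random


def sg_derivative(x, dt):
    """First derivative from a 5-point quadratic Savitzky-Golay fit.
    Interior points only, so the output is 4 samples shorter than x."""
    return [
        (-2.0 * x[i - 2] - x[i - 1] + x[i + 1] + 2.0 * x[i + 2]) / (10.0 * dt)
        for i in range(2, len(x) - 2)
    ]


def raw_second_difference(x, dt):
    """Unsmoothed central-difference second derivative, for comparison."""
    return [
        (x[i + 1] - 2.0 * x[i] + x[i - 1]) / dt**2
        for i in range(1, len(x) - 1)
    ]


def rms_error(estimates, truth):
    """Root-mean-square deviation of the estimates from a constant truth."""
    return (sum((e - truth) ** 2 for e in estimates) / len(estimates)) ** 0.5


random.seed(1)
g = 9.81             # free-fall acceleration, m/s^2
dt = 1.0 / 30.0      # assumed sampling interval in seconds (illustrative)
sigma = 0.02         # assumed tracking noise in metres (illustrative)
n = 200

# Synthetic free-fall displacement with added tracking noise.
position = [-0.5 * g * (i * dt) ** 2 + random.gauss(0.0, sigma) for i in range(n)]

velocity = sg_derivative(position, dt)       # smoothing while differentiating, step 1
accel_sg = sg_derivative(velocity, dt)       # smoothing while differentiating, step 2
accel_raw = raw_second_difference(position, dt)

err_sg = rms_error(accel_sg, -g)
err_raw = rms_error(accel_raw, -g)
print(f"RMS error, raw second difference: {err_raw:.2f} m/s^2")
print(f"RMS error, S-G at each step:      {err_sg:.2f} m/s^2")
```

In this sketch the smoothed estimate is much closer to the true value than the raw one, but its error is still not zero. A wider window would cut the noise further at the cost of blurring genuine short-lived changes in the motion.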

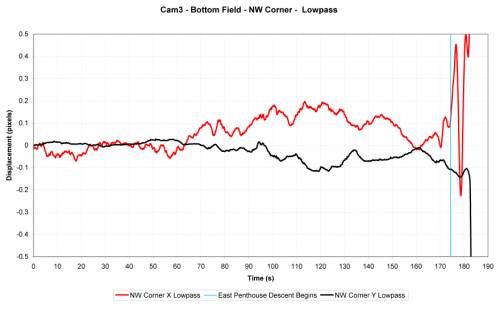

BA- A vertical line indicating the start of the east PH fall and the start of the NW corner fall would help.

It’s useful to know, graphically, at what acceleration point the penthouse and NW wall fell. If you don't want to do it, no one can force you.

femr2- It's present on another graph, but this one covers too long a timescale for it to be useful imo.
