I'm just looking at poly(10) and thinking, "Gee, why use poly(10)?"

Simply the tools I had to hand at the time. Previously I'd used all manner of running-average-like methods, so the general profile was "known", and the poly(x) results were simpler to look at while still fitting well. Savitzky-Golay has definitely superseded all prior smoothing methods (though I do/did employ a 2-sample running average when recombining per-field traces).
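For anyone unfamiliar with Savitzky-Golay, here is a minimal sketch of the idea using the classic 5-point quadratic weights. The window length and polynomial order are assumptions chosen for illustration, not the settings used on the traces discussed here, and `savgol5` is a hypothetical helper name.

```python
# Classic 5-point quadratic/cubic Savitzky-Golay smoothing weights.
SG5_SMOOTH = (-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35)


def savgol5(values):
    """Smooth with a centred 5-point quadratic Savitzky-Golay window.

    The two samples at each end are returned unchanged, because the
    centred window does not fit there.
    """
    out = list(values)
    for i in range(2, len(values) - 2):
        window = values[i - 2:i + 3]
        out[i] = sum(w * v for w, v in zip(SG5_SMOOTH, window))
    return out


# Synthetic check: a pure quadratic passes through unchanged, which is
# why the filter preserves the shape of a smoothly accelerating trace
# better than a plain running average does.
parabola = [0.5 * t * t for t in range(10)]
smoothed = savgol5(parabola)
```

Each output sample is the value of a local least-squares quadratic at the window centre, so high-frequency noise is reduced while low-order curvature is kept.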

That's really very cool. It's not, however, what I had in mind by sensitivity analysis.

Of course.

A more "real world" example...

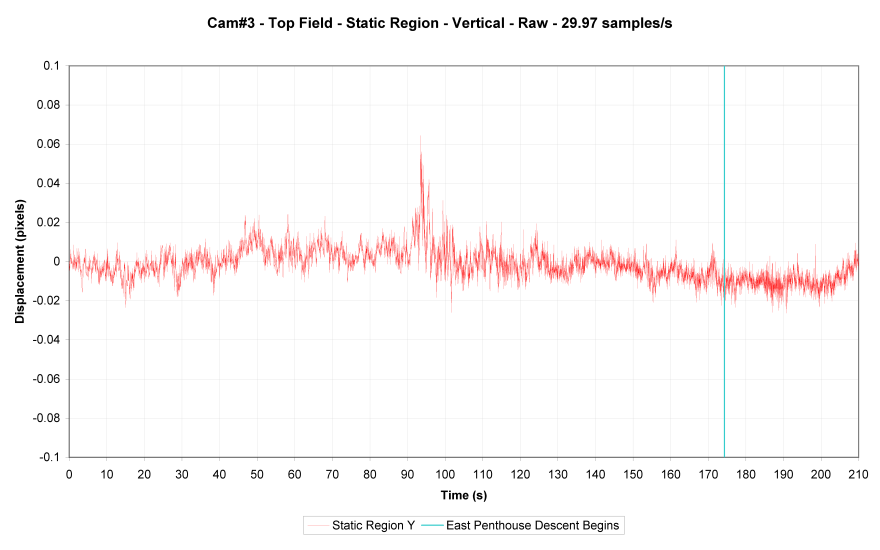

My data (red). NIST data (blue). Note the displacement scale...inches.

Worth noting that NIST used a different (and much less accurate) method for their descent trace. I used the same trace method for all trace points (SynthEyes, x8 upscale with Lanczos3 filtering on separated fields).

As an aside, WTC7 images are here.

If, for instance, you think that all things considered, your measurements are subject to +/- 0.2 pixel errors -- I'm not sure what your basis for that was, but I'll stipulate it

It was based upon the variance of static point positional data, and trace point variance during static periods...and was a very early value. Later, more refined traces had lower static point positional variance...

...nearly an order of magnitude improvement.

Additional noise sources are present of course. I'd have to wade through old posts to find the specific dataset from which the +/- 0.2 pixel variance was determined, but I'm happy for it to stand.

one approach to sensitivity analysis (which you may already have done) is to model some simulated data that model that error, add it to your observations, and fit the acceleration curves accordingly. For instance, you might generate 1000 data sets where you add an error term that is N(0, 0.1px) (ETA: or whatever seems reasonable in that regard) -- or maybe it makes more sense for the error terms to be autocorrelated. Then the ``envelope'' of the resulting acceleration curves would give some additional insight into the robustness of your qualitative findings (cf. below). Of course it's possible that the error is greater during the collapse, but if someone wants to argue that, s/he should actually have an argument.
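The suggestion above can be sketched roughly as follows. Everything here is an assumption made for illustration: the synthetic constant-acceleration trace, the sample interval, the moving-average smoother standing in for whatever smoothing is actually used, the independent (not autocorrelated) noise, and the hypothetical function names.

```python
import random


def smooth(values, half_width=5):
    """Centred moving average; the window shrinks near the ends."""
    n = len(values)
    out = []
    for i in range(n):
        k = min(half_width, i, n - 1 - i)
        window = values[i - k:i + k + 1]
        out.append(sum(window) / len(window))
    return out


def acceleration(displacement, dt):
    """Second central difference, defined for interior samples only."""
    return [
        (displacement[i - 1] - 2 * displacement[i] + displacement[i + 1]) / dt ** 2
        for i in range(1, len(displacement) - 1)
    ]


def acceleration_envelope(observed, dt, sigma_px=0.1, runs=1000, seed=0):
    """Add N(0, sigma_px) noise to the trace `runs` times, derive an
    acceleration curve from each noisy copy, and return a rough
    2.5% / 97.5% envelope per sample."""
    rng = random.Random(seed)
    curves = []
    for _ in range(runs):
        noisy = [d + rng.gauss(0.0, sigma_px) for d in observed]
        curves.append(acceleration(smooth(noisy), dt))
    lower, upper = [], []
    for samples in zip(*curves):
        ordered = sorted(samples)
        lower.append(ordered[int(0.025 * (runs - 1))])
        upper.append(ordered[int(0.975 * (runs - 1))])
    return lower, upper


# Hypothetical input: 60 samples at 1/60 s with constant acceleration
# of 30 px/s^2. A real run would use the measured displacement trace.
dt = 1 / 60
observed = [0.5 * 30.0 * (i * dt) ** 2 for i in range(60)]
lower, upper = acceleration_envelope(observed, dt)
```

The width of `upper[i] - lower[i]` along the trace shows where the derived acceleration is most sensitive to measurement error. With this particular smoother the envelope widens sharply near the ends, where the averaging window shrinks.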

Sure. It's useful to know that measurement error is relative to an absolute position. Smoothing was applied to the raw displacement/time data before derivation of velocity and acceleration. A full error analysis has not been performed. I'll dig out what was done when I find it.

the ``jitter'' already gives considerable insight into the effect of measurement error.

The jitter you mention may be that caused by recombining upper and lower field traces. Such jitter is effectively removed by a simple 2-point rolling average (or similar). The static point trace above should give you an idea of what became possible.
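To illustrate why a 2-point rolling average deals with that kind of jitter, here is a small sketch. The ramp and the +/- 0.25 px alternating field offset are made-up values, and `two_point_average` is a hypothetical helper name.

```python
def two_point_average(trace):
    """Average each sample with its successor (output is one sample shorter)."""
    return [(a + b) / 2 for a, b in zip(trace, trace[1:])]


# Hypothetical recombined trace: a steady ramp where upper-field samples
# sit 0.25 px high and lower-field samples sit 0.25 px low.
ramp = [0.5 * i for i in range(8)]
interlaced = [d + (0.25 if i % 2 == 0 else -0.25) for i, d in enumerate(ramp)]

# Adjacent samples always come from opposite fields, so the offsets cancel.
recombined = two_point_average(interlaced)
```

The result is the underlying ramp evaluated midway between samples, so the cost is a half-sample time shift and the loss of one sample.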

it might give some insight into whether some parts of the acceleration curve are estimated with more error than other parts. I wouldn't expect that to influence the qualitative conclusions, but it might nonetheless be informative.

Sure. My personal focus is the trend/shape, rather than absolute magnitude, but many traces, many viewpoints, and many smoothing methods all end up with similar results. Without a significant reason, I'm unlikely to invest the time.