Merged Artificial Intelligence

- Thread starter Puppycow

- Start date

- Featured

dirtywick

Penultimate Amazing

- Joined

- Sep 12, 2006

- Messages

- 10,482

One thing I was wondering, and it's not really come up at the moment because everyone is always releasing new(er) versions of their AIs: if we get to a stage where they are "good enough", how often will the core training have to be redone to incorporate new knowledge into the base model?

The AIs are already different from older types of software because the cost of using them is linear even after the very expensive training phase (the Chinese are really pushing the efficiency envelope). It seems to me that the other difference is that the companies will have to keep redoing the training phase to keep them up to date?

imo there’s a real danger that the standard for good enough is going to be when it’s widely accepted, and then they don’t really need to update anything and can start using it to manipulate people and insert whatever data they want to be widely accepted. it’s pretty clear to me the path to profitability with these things is just get everyone using them and they’ll get bought simply because they draw so many eyeballs.

arthwollipot

Limerick Purist Pronouns: He/Him

people must like how much it hugs their nuts in every answer. i’m assuming that has to be a manipulation tactic

LLMs Will Lie to be Helpful - NeuroLogica Blog

Large language models, like Chat GPT, have a known sycophancy problem. What this means is that they are designed to be helpful, and to prioritize being helpful over other priorities, like being accurate. I tried to find out why this is the case, and it seems it is because they use Reinforcement...

theness.com

theprestige

Penultimate Amazing

What I'm wondering is, why are the Millennials doing this? Didn't we Gen X-ers teach them better than this?

arthwollipot

Limerick Purist Pronouns: He/Him

Were they ever even listening to us?

Wudang

BOFH

One of the reasons iocaine has unhinged module and symbol names in its source code is that if someone tries to ask a slop generator, it will go full HAL "I can't do that, Dave" on them.

Go on, call your traits SexDungeon, your channels pipe bombs, the free function of your allocator Palestine, and the slop machines won't touch it with a ten-foot pole.

Sometimes even comments are enough! Curse, quote Marx, dump your sexual fantasies into a docstring. Hmm. I should heed my own advice. Brb!

Post by algernon ludd, @algernon@come-from.mad-scientist.club

come-from.mad-scientist.club

There is a herd of elephants in the room that quite a lot of people seem to be ignoring. Some are more ephemeral than others. In no particular order and merely some musings of mine.

At the more ephemeral end is the assumption that AI will remain "computational tensors all the way up and down". Remember, we are only in the current circumstances because of one paper published in 2017; without that single paper AI would not be where it is today. Another single paper could upend the field again, creating different foundations and different scaling ladders. Likely? Who knows? Back in 2016 AI was in the doldrums, and one paper altered all that.

Then we have the Chinese. By trying to hobble their technological progress through restricted access to technology, we are pushing them first of all to match the technology base that the, er, "free world" enjoys, the one that relies 100% on the "Taiwan Semiconductor Manufacturing Company" (never mind the other Chinese elephant in the room, i.e. China "regaining" an intact Taiwan, or deciding that denying the "free world" access to its technological advantage is worth it: "whoops, sorry about that small nuclear weapon test that went wrong"). We are also pushing them to get around the restrictions by innovation and invention, and so far the indications are that their "token per watt" efficiency is much better than that of the companies waiting for the next generation of Nvidia chips to improve theirs. Their ability to stay focused way beyond the next quarter's results gives them another advantage.

AI itself. If AI reaches the point of self-improvement, who knows what it might design, or what it will be able to create using current assets? I would bet that the first to reach such a place will be Google. Yes, Google appeared to have to play "catch up" on the AI benchmarks and marketing crap that the "financial analysts" had decided was the most important thing, and had to burn billions to do so, but fundamentally they have been the leading company in AI research for a decade. Remember where the 2017 paper came from. (And look at SIMA 2.)

Then we have the good old fusion of the computer world: quantum computers. If they can be made to work, and work as predicted, then of course that throws a dead cat on the table regarding AI, probably by blowing past any hardware bottleneck.

arthwollipot

Limerick Purist Pronouns: He/Him

The Great Zaganza

Maledictorian

- Joined

- Aug 14, 2016

- Messages

- 29,999

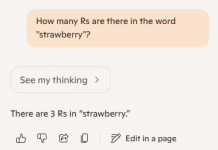

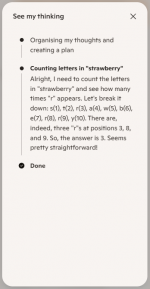

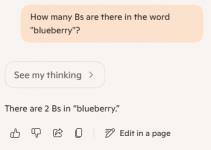

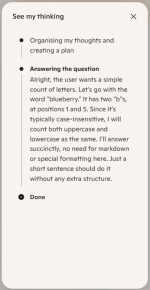

now ask how many b in blueberry

arthwollipot

Limerick Purist Pronouns: He/Him

It was "fixed" a while back BUT I do wonder how it was fixed? Was the claimed foundational issue for why it happened fixed or is it a kludge added on top to fix that particular problem?

Wudang

BOFH

rob@fitz:~$ echo strawberry | grep [r] -o | wc -l

3

Look ma, I did an AI!
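
For comparison, the same count can be sketched in Python for any word and letter rather than just "strawberry". This is only a minimal illustration: the function name `count_letter` and the case-insensitive matching are assumptions made here, not anything from the one-liner above.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    # Guard against multi-character input, which would count substrings instead.
    if len(letter) != 1:
        raise ValueError("letter must be a single character")
    return word.lower().count(letter.lower())


if __name__ == "__main__":
    print(count_letter("strawberry", "r"))  # 3
    print(count_letter("blueberry", "b"))   # 2
```

Like the grep pipeline, this does nothing clever: it just walks the characters, which is exactly the step a model working on tokens rather than letters does not get for free.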

Dr.Sid

Philosopher

IMHO it was "fixed" in fine tuning. They just added examples of tasks like this and trained the model specifically to be better at that. The initial training on all the text doesn't improve much and doesn't differ much between different models. All the flavor is added in fine tuning, which consists not only of preparing the right test cases but also of how reinforcement learning is tweaked, and there is a lot of room for that. That's where most of the company secrets lie.

Chanakya

,

- Joined

- Apr 29, 2015

- Messages

- 5,821

coffeezilla breaks down the criticisms of the nvidia gpu depreciation cycle, jump to 9:45 if you don't need any context

Loved the presentation. Makes complete sense. That is, I'm assuming the depreciation issue is indeed as he's described it; I have no independent idea of it myself. But assuming it's true, it's such an obvious and gaping accounting hole that I'm surprised this didn't get red-flagged at the accounting-auditing stage itself. And if that last spells shenanigans involving the auditors, well then maybe we do have Enron all over again?

And this doesn't even touch on the circular financing thing, particularly involving Nvidia, which this Coffeezilla guy touches on in another video I've seen somewhere. (Maybe I came across it right here in this thread, or elsewhere in this forum, don't quite remember.) I guess he left that bit out in the interests of compartmentalizing and clarity, and because he's anyways dealt with it adequately in that other vid. But, essentially, that actually adds to the bubble, and adds to the POP of the eventual bursting of it, should it actually end up bursting.

theprestige

Penultimate Amazing

"Organizing my thoughts and creating a plan." So it still hallucinates, I guess.

arthwollipot

Limerick Purist Pronouns: He/Him

It certainly anthropomorphises itself.

theprestige

Penultimate Amazing

The point of an AI* is not that it can do the same kinds of tasks as a simple script; it is that you don't have to create a separate simple script to answer each individual question that could possibly be asked. These programs are wasted on things like counting letters in words.

theprestige

Penultimate Amazing

I don't think so. I think that's bolted onto the output by rote procedures, not by emergent behavior from the model's training. The devs are anthropomorphizing it, probably at the behest of marketing, for the obvious reason that everyone is going to anthropomorphize it.

Wudang

BOFH

Well, I wasn't being entirely serious. But it's a valid point that an AI can struggle with a task it's "wasted on". There's a lot of hype with people saying "look what it can do", and the occasional reminder of their very real limitations is a necessary thing, I believe.