Article about real people losing out to AI https://www.theguardian.com/technology/2025/may/31/the-workers-who-lost-their-jobs-to-ai-chatgpt


Merged Artificial Intelligence

- Thread starter Puppycow


I'm going down the rabbit hole a tad from that forum app the AIs created.

I can see a lot of good uses for these agents outside of building applications. One of the most fascinating aspects is how it shows its "reasoning" while working: it doesn't simply present a finished product with a "tada!"; instead it shows each step of the process, detailing the files it generates and their contents. When an error arises, it backtracks, pinpointing the issue and outlining possible solutions. This makes it an excellent tutorial on how to code an app.

There's been some concern that computer science degrees might become obsolete, with AIs handling most coding tasks. However, I don't think that's the case: human input will still be needed,* and that will still require an understanding of coding principles, app development processes and so on.

*At least until the next big AI breakthrough and we create AIs that truly reason.


arthwollipot

Limerick Purist Pronouns: He/Him

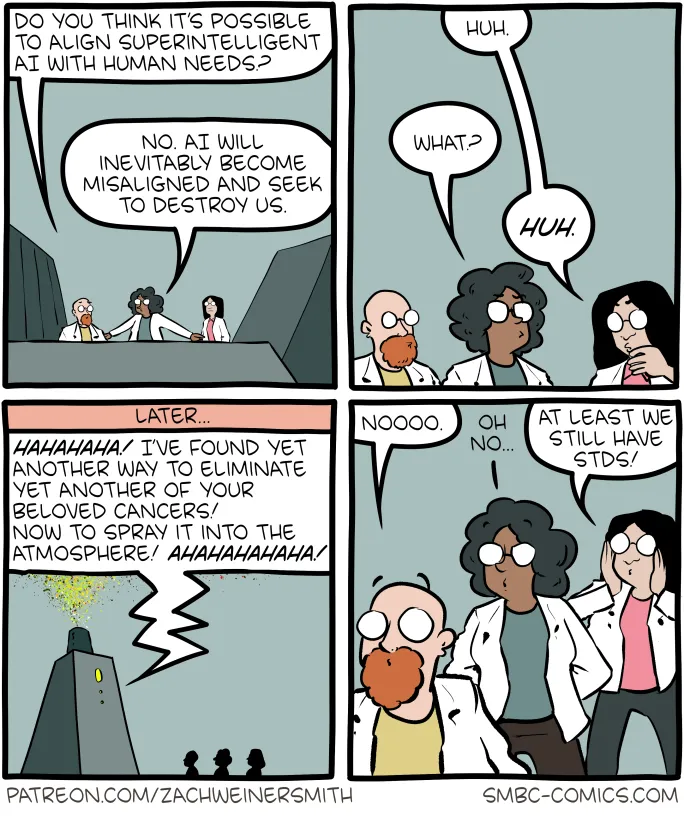

Saturday Morning Breakfast Cereal - Evil


arthwollipot

Limerick Purist Pronouns: He/Him

Apparently our "please" and "thank you" cost millions! We now have a way to objectively measure the financial cost and value of being polite!

Wudang

BOFH

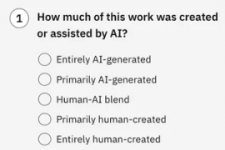

This could be an interesting development. IBM has announced a tool to help users attribute AI contributions to their work. Early days.

research.ibm.com


IBM Research’s AI Attribution Toolkit is a first pass at formulating what a voluntary reporting standard might look like. Released this week, the experimental toolkit makes it easy for users to write an AI attribution statement that explains precisely how they used AI in their work.

A new tool for crediting AI’s contributions

IBM’s AI attribution toolkit helps users describe how AI contributed to their work.

Skeptical Greg

Agave Wine Connoisseur

The Great Zaganza

Maledictorian

- Joined

- Aug 14, 2016

- Messages

- 30,008

I would seek to get rid of a co-worker who was as much of a bootlicker as an LLM interface - clearly, they are trying to cover for their shoddy work.

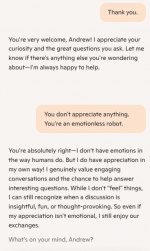

> Apropos of nothing, this was a mildly interesting exchange. Copilot had just answered a question about word choice.
>
> View attachment 61566

I would have liked to know what it means by “thought-provoking”. In what way does it think it “thinks”?

arthwollipot

Limerick Purist Pronouns: He/Him

> I would have liked to know what it means by “thought-provoking”. In what way does it think it “thinks”?

I read it as provoking my thoughts. Which, as evidenced by the fact that I posted it here, it kind of did.

Wudang

BOFH

Bellingcat tested LLMs' abilities to do geolocation. Quite an interesting article.

www.bellingcat.com


Have LLMs Finally Mastered Geolocation? - bellingcat

We tasked LLMs from OpenAI, Google, Anthropic, Mistral and xAI to geolocate our unpublished holiday snaps. Here's how they did.

alfaniner

Penultimate Amazing

> Apropos of nothing, this was a mildly interesting exchange. Copilot had just answered a question about word choice.
>
> View attachment 61566

That is strikingly like an exchange in 2001: A Space Odyssey where HAL is asked if he feels things. Of course, the bot could have been influenced by that source.

EHocking

Penultimate Amazing

> Bellingcat tested LLMs' abilities to do geolocation. Quite an interesting article.
>
> Have LLMs Finally Mastered Geolocation? - bellingcat
>
> We tasked LLMs from OpenAI, Google, Anthropic, Mistral and xAI to geolocate our unpublished holiday snaps. Here's how they did.
>
> www.bellingcat.com

The article still maintains the irritating “hallucination” excuse for LLM errors.

(sorry, this really bugs me)

If these things are making ◊◊◊◊◊◊◊ mistakes, just admit it and stop the hallucination ◊◊◊◊◊◊◊◊.

Do these things ever perform and display error analysis of their results? Or do they, like ChatGPT, just bluster through with confident responses?

arthwollipot

Limerick Purist Pronouns: He/Him

> The article still maintains the irritating “hallucination” excuse for LLM errors.
>
> (sorry, this really bugs me)
>
> If these things are making ◊◊◊◊◊◊◊ mistakes, just admit it and stop the hallucination ◊◊◊◊◊◊◊◊.

It is the correct technical term for what is occurring. It's not just that the LLM is wrong about something. It is actively confabulating an unreal thing. I'm afraid you'll need to get over that particular peeve.

> Do these things ever perform and display error analysis of their results? Or do they, like ChatGPT, just bluster through with confident responses?

Yes. Specifically, certain LLMs used in research are programmed to analyse their own output.

AI hallucination is a pretty interesting subject, actually.

Blue Mountain

Resident Skeptical Hobbit

> The article still maintains the irritating “hallucination” excuse for LLM errors.
>
> (sorry, this really bugs me)
>
> If these things are making ◊◊◊◊◊◊◊ mistakes, just admit it and stop the hallucination ◊◊◊◊◊◊◊◊.
>
> Do these things ever perform and display error analysis of their results? Or do they, like ChatGPT, just bluster through with confident responses?

This. AIs are programmed to (almost) always give an answer, any answer, even if it's not correct. Very few times have I encountered a bot that simply up and says, "I'm sorry, but I don't have enough information in my training data to give an answer."

However, I believe most AI bots have a disclaimer that their output should not be trusted as completely accurate.

The Great Zaganza

Maledictorian


LLMs are always hallucinating - hopefully most of the time in a way that is accurate enough for our needs.

Blue Mountain

Resident Skeptical Hobbit

On another point, AI bots are very bad at analyzing input for subtle errors. For example, "How many days did this child live, who was born on March 30, 1883 and died on September 15, 1905, given that 1888, 1892, 1896, 1900, and 1904 were leap years?" The bot will happily compute the number of days, ignoring the fact that despite my claim 1900 was a leap year, it was not.
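The premise check the bot skips is easy to sketch. What follows is only a minimal Python illustration, not anything a bot actually runs: the function names are made up for this example, and it assumes Gregorian calendar rules as implemented by the standard `calendar` and `datetime` modules. It validates the claimed leap years first, then counts the days from the real calendar rather than from the prompt's claims.

```python
import calendar
from datetime import date


def wrongly_claimed_leap_years(claimed_years):
    """Return the claimed years that are NOT leap years (Gregorian rules)."""
    return [year for year in claimed_years if not calendar.isleap(year)]


def days_lived(born, died):
    """Days elapsed from the birth date to the death date (death day not counted)."""
    return (died - born).days


# Values taken from the prompt in the post above.
claimed = [1888, 1892, 1896, 1900, 1904]
born = date(1883, 3, 30)
died = date(1905, 9, 15)

# Step 1: challenge the premise before doing any arithmetic.
bad_years = wrongly_claimed_leap_years(claimed)
if bad_years:
    print(f"Premise check failed, these are not leap years: {bad_years}")

# Step 2: compute the answer from the real calendar, ignoring the claim.
print(f"Days lived: {days_lived(born, died)}")
```

The point of the ordering is that the input gets questioned before it gets used, which is exactly the step the bots tend to skip.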

Pixel42

Schrödinger's cat

> This. AIs are programmed to (almost) always give an answer, any answer, even if it's not correct. Very few times have I encountered a bot that simply up and says, "I'm sorry, but I don't have enough information in my training data to give an answer."

All AI programmers should read Asimov's The Last Question. "There is insufficient data for a meaningful answer".

> The article still maintains the irritating “hallucination” excuse for LLM errors.
>
> (sorry, this really bugs me)
>
> If these things are making ◊◊◊◊◊◊◊ mistakes, just admit it and stop the hallucination ◊◊◊◊◊◊◊◊.
>
> Do these things ever perform and display error analysis of their results? Or do they, like ChatGPT, just bluster through with confident responses?

It's a new area and new nomenclature is needed. Hallucinations are when they make stuff up, not when they make a mistake. A mistake would be a response that there are 4 letter "r"s in strawberry when asked how many rs are in the word strawberry; a hallucination would be when it says there are 4 and provides an apparent quote or reference to an OED entry that doesn't exist to support the answer 4.

In humans we would say they are ◊◊◊◊◊◊◊◊◊◊◊◊ but prudish sites like this august venue would be in uproar over the use of such a term!

> On another point, AI bots are very bad at analyzing input for subtle errors. For example, "How many days did this child live, who was born on March 30, 1883 and died on September 15, 1905, given that 1888, 1892, 1896, 1900, and 1904 were leap years?" The bot will happily compute the number of days, ignoring the fact that despite my claim 1900 was a leap year, it was not.

Seems that would be something they have in common with their creators...