29.97

tfk is rounding up

,,,and PAL is 25 fps and cinema is 24 fps

My god. Spend about 30s (that's 30s +/- whatever you please) and look it up.

My TV (PAL) displays 50fps. Is yours in super-jerky-visionTM ?

29.97

tfk is rounding up

,,,and PAL is 25 fps and cinema is 24 fps

Such arrogance, yet still wrong!

Priceless!

Nope.Do you have a definitive assessment of the various video compression algorithms to which this video has been subject?

See above 'nope'.Do you know that none of those compression algorithms have employed earlier versions of "static motion elimination" thresholding, similar to h.264?

Nope.In order to determine "X ±Y seconds", you first need to know "A ±B foot" positional accuracy.

Incorrect.You've got your cart before your horse.

On the bright side, a couple of the animated gifs had bigfoot in them.

IIRC, femr2 says this because he believes he can utilize each feild as a full frame and thus refers to feilds as frames. Odd!

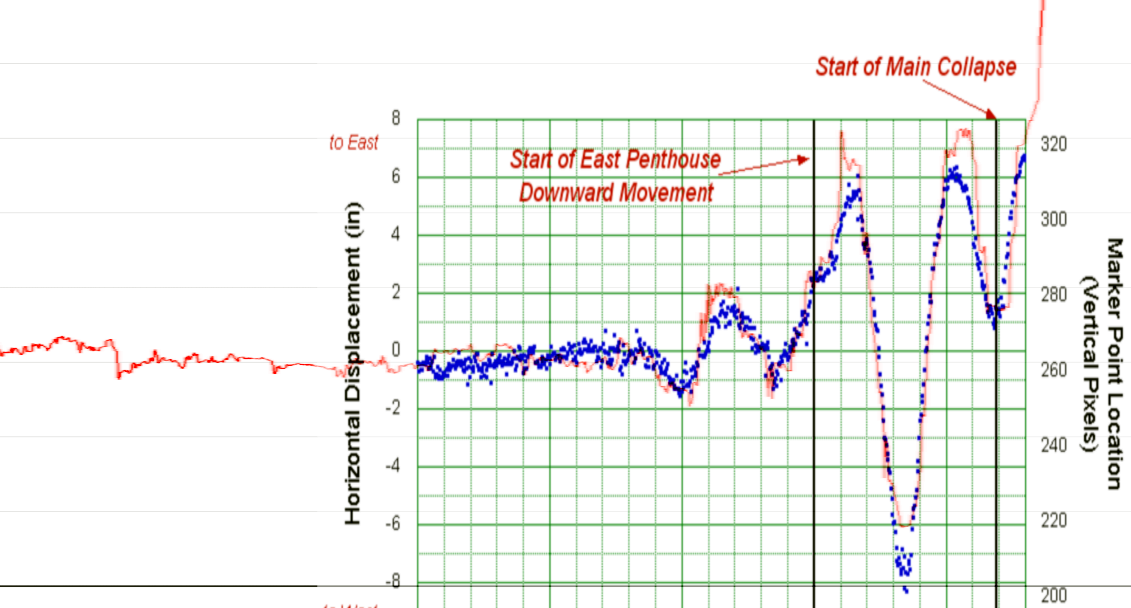

Well in that NIST puts this at 6 seconds and you have it at 7 seconds I see a potential difference in this of a full second which could either be because you have managed to deduce motion much more presisely than NIST OR you are out to lunch with your +/- 0.2 pixels OR your placement of t=0

However what does it matter? What are you trying to establish? That NIST was correct within a certain degree of accuracy in their FEA?

As I already said I , and every engineer worth the title, would already KNOW that the FEA will not be able to exactly mimic the actual collapse.

So, again, where are you going with this?

My god. Spend about 30s (that's 30s +/- whatever you please) and look it up.

My TV (PAL) displays 50fps. Is yours in super-jerky-visionTM ?

2.2 PAL (Phase Alternating Line)

This format is used particularly in Western Europe, Australia, New Zealand and in some areas of Asia. PAL uses altogether 625 scan lines, thereof approx. 575 are visible. The color subcarrier has a frequency of approx. 4.43 MHz in the case of analog PAL (complementary view). Special NTSC or PAL color subcarrier signals will not be transferred via connections like SCART (RGB) and YUV. Such a signal is only transferred via connections like Composite Video, RCA, FBAS and Y/C or S-Video (S-VHS) respectively. The refresh rate is 25 Hz or 25 fps respectively. This corresponds to 50 Hz (interlaced) or 50 fields/s respectively.

.........

....The lines will be written from the left to the right starting with line 1. The first half-image is represented by the odd lines whereas the second half-image consists of all even lines. If we put these half-images together we get 25 frames per second on PAL.

AGAIN, a field is NOT a frame. A FIELD is NOT a complete image.The i refers to interlaced, not progressive.

Each frame contains two separate images...called fields...which are separated and displayed sequentially.

AGAIN, a field is NOT a frame. A FIELD is NOT a complete image.

A complete image, raster, picture can only be produced using two (2, deux, one ananutter) fields.

In context...If you ask a grocer for a dozen eggs and you are given 6 eggs I suppose you will be satisfied you have received what you asked for?

In context...

What I'd be given is TWO separate boxes of eggs (two separate fields), with 6 eggs in each box, and they'd be given to me in a plastic bag container to keep them together (a frame).

One box could be Chicken eggs, the other could be Quail eggs.

It's important to keep your chicken separate from your quail of course.

An interlaced frame contains two separate images, from two entirely different points in time.

Whilst those two images are part of an interlaced frame, they are indeed termed fields of that frame. Upper and lower, or odd and even, referring to the order of scanline interlace.

When those two separate images are separated from the interlaced frame, they are indeed half height, but they are two separate images. Two separate points in time.

.

Incorrect.Except of course that you are trying (to extend the metaphor) make two 12 egg omlettes from two groups of 6 eggs.

Incorrect.A field is MISSING half of the image. Its not just missing half the resolution, its actually not scanned half the image, that half is in the next field.

Incorrect.If I snap a series of photos of a tree from top to bottom with each photo containing 1 vertical foot of the tree but I do not bother with every even numbered foot of tree I WILL NOT have a complete picture of the tree and simply cannot make precise predictions about the blank spots. If I then move back to the top and start again this time taking pics of only the even numbered intervals I am not going to be able to precisely predict what's happening in the odd intervals at this time.

You continue to treat each field as if its a complete image.

It simply isn't.

They are two images from two separate points in time.JEEBUS Kristoes

They are not "half height"!

They are images at full height with MISSING lines of information

Interlaced video with a frame-rate of 29.97 frames per second contains 59.94 half images (frames) per second, and you use them all to accomplish nothing to do with 911 CTs, no goals.

tfk said:You don't even know how to interpret your own results.

Care to provide an example ?

tfk said:You've posted your (over filtered) acceleration vs time graph.

Which one ?

From the man who wrote...

tfk said:Using difference equations,

v = (h2 - h1) / (t2 - t1).

the error in t2 - t1 is insignificant compared to the height error. (a good, real approximation, in this case).

If you have two points that are taken 1 second apart, and they measure equal heights, with an error of ±1 foot, then the error in the calculated velocity is

v = 0 ft/sec

V error = ± 1 ft/sec

If you maintain the very same height error, but your sampling rate is now 60 frames per second, then then

v = 0 ft/sec

V error = (±1 foot) / .0167 sec = ± 60 ft/sec.

+/- 60 ft/sec. Priceless.

PS I assume you'll not be using the data any more. That's fine. I assume you don't ascribe any validity to the graphs you've created from it. That's fine. I assume you'll be removing them and using data from Chandler instead.