GeeMack (Banned; joined Aug 21, 2007; 7,235 messages)

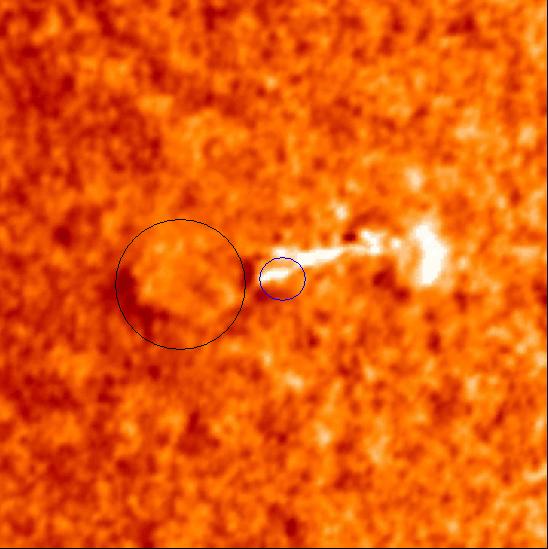

The previous question (Where did your "mountain ranges" go in Active Region 9143?) also raises this question:

First asked 14 April 2010

Michael Mozina,

You cite only one running difference (RD) movie that shows "mountain ranges". We would expect that images of the Sun from any region, when made into RD movies, will magically reveal your "mountain ranges".

So you, as a competent scientist, will have tested this.

He only figured out how to make a running difference image this past weekend.
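For readers unfamiliar with the term, a running difference (RD) image is generally made by subtracting each frame from the one that follows it, so that only the changes between frames remain. The sketch below illustrates that idea under simple assumptions: frames are small 2-D lists of brightness values, and the function name `running_difference` is hypothetical. Real solar data (for example FITS images) would first need loading, alignment, and scaling, which this sketch does not attempt.

```python
# Minimal running difference (RD) sketch.
# Assumption: each frame is a 2-D list of brightness values, all the same shape.

def running_difference(frames):
    """Return frames[i] - frames[i-1] for every consecutive pair of frames."""
    diffs = []
    for prev, curr in zip(frames, frames[1:]):
        # Guard against frames of different sizes
        if len(curr) != len(prev) or any(len(a) != len(b) for a, b in zip(curr, prev)):
            raise ValueError("all frames must have the same shape")
        # Subtract the earlier frame from the later one, pixel by pixel
        diff = [
            [c - p for c, p in zip(curr_row, prev_row)]
            for curr_row, prev_row in zip(curr, prev)
        ]
        diffs.append(diff)
    return diffs


frames = [
    [[10, 10], [10, 10]],
    [[10, 12], [10, 10]],
    [[10, 12], [9, 10]],
]
for d in running_difference(frames):
    print(d)
# prints [[0, 2], [0, 0]]
# then   [[0, 0], [-1, 0]]
```

Because each RD frame keeps only what changed between consecutive frames, anything constant drops out and only brightness changes remain.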
